
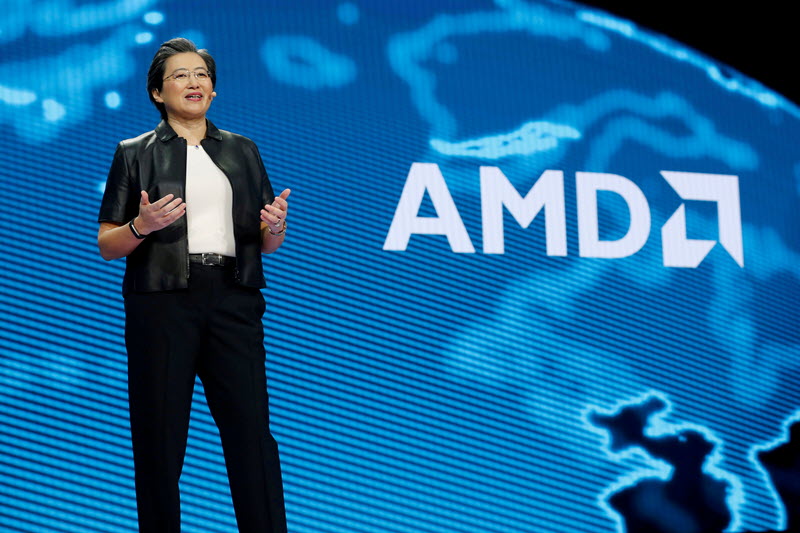

On Wednesday, 28 May 2025, Advanced Micro Devices (NASDAQ:AMD) presented at TD Cowen’s 53rd Annual Technology, Media & Telecom Conference 2025. The company outlined its strategic focus on artificial intelligence (AI) and financial performance. While AMD expressed optimism about its growth prospects and product roadmap, concerns about geopolitical tensions and market fluctuations were also addressed.

Key Takeaways

- AMD is heavily investing in AI, with significant advancements in its GPU lineup, notably the MI300, MI350, and MI450.

- The company reported a 68% year-over-year growth in client revenue in Q1, driven largely by average selling price (ASP) gains.

- AMD anticipates improved gross margins in the latter half of the year, despite challenges in the data center GPU segment.

- Export restrictions have impacted planned revenue, particularly in China, but AMD remains focused on inventory management and revenue share gains.

- The company is optimistic about its competitive positioning and expects AI to drive higher computation demand.

AI Strategy and Product Roadmap

- AMD is focusing on generative AI, emphasizing rapid innovation and scaling challenges.

- The MI300 series generated $5 billion in revenue in its first year, with the MI350 offering a stated 35x performance improvement while staying on the same universal baseboard infrastructure.

- The MI450 is set for release in 2026, designed for massive scaling and demanding training workloads.

- Software development is accelerating, with ROCm releases now shipping every other week to improve performance.

- AMD’s hyperscaler focus includes partnerships with Meta, Microsoft Azure, and Oracle.

- The ZT Systems acquisition strengthens rack-scale design capabilities, adding 1,200 engineers with a design-for-manufacturability background.

Financial Results

- Client revenue saw a substantial 68% increase year-over-year in Q1, with ASP gains contributing two-thirds of this growth.

- Despite a sequential decline in Q1 units, sell-through exceeded sell-in in the desktop business.

- Gross margins are expected to expand in the latter half of the year, driven by product mix improvements.

- Export restrictions led to the removal of $1 billion in planned revenue for MI308 shipments to China.
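As a rough illustration of the client figures above, the sketch below splits the 68% year-over-year growth into the portion attributed to ASP gains and the remainder. It assumes a simple additive attribution (two-thirds of the growth points go to ASP); the source does not say how AMD computed the split, and the function name `split_growth` is hypothetical.

```python
def split_growth(total_growth: float, asp_share: float) -> tuple[float, float]:
    """Split a growth rate into ASP-driven and remaining percentage points.

    Assumption: a simple additive attribution, not AMD's stated methodology.
    """
    asp_points = total_growth * asp_share
    remaining_points = total_growth - asp_points
    return asp_points, remaining_points


# 68% client revenue growth, with about two-thirds attributed to ASP gains
asp_points, remaining_points = split_growth(0.68, 2 / 3)
print(f"ASP-driven: {asp_points:.1%}, remaining (units and other): {remaining_points:.1%}")
```

Under this reading, roughly 45 of the 68 growth points would come from pricing and mix, with the rest from volume and other factors.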

Operational Updates

- AMD is prioritizing revenue share gain, focusing on performance, battery life, and AI capability in notebooks.

- The company is managing business fluctuations due to geopolitical factors and tariffs.

- A larger-than-expected client business and rebuilding inventory in the console sector are key operational focuses.

Future Outlook

- AMD is confident in its ability to compete in the AI market, with a strong product roadmap and strategic partnerships.

- The company anticipates a market shift back toward CPU investment and robust growth in commercial PCs.

- AI is expected to drive overall higher demand for computation across various sectors.

For a detailed understanding, readers are encouraged to refer to the full transcript.

Full transcript - TD Cowen’s 53rd Annual Technology, Media & Telecom Conference 2025:

Josh, Interviewer, TD Cowen: Good morning, everybody. Welcome. This is the first event at TD Cowen’s fifty-third, got it right, annual TMT conference. Really pleased to be kicking it off with Mark Papermaster, CTO of AMD, and Matt Ramsay, VP of IR and financial strategy. Gentlemen, thank you for joining us.

And Matt, welcome back.

Matt Ramsay, VP of IR and Financial Strategy, AMD: Thank you.

Josh, Interviewer, TD Cowen: Mark, you have a unique vantage being a key decision maker at a very important technology company at a time when it seems like technology has never been more important. I mean, if we reflect back two years ago, no one was talking about generative AI. Maybe to start big picture, what are the key problems that you and your customers are trying to solve for today? And what are you most excited about to be working on?

Mark Papermaster, CTO, AMD: Well, Josh, it’s a great question to get us started at the fifty-third TD Cowen event here, which is pretty awesome. Congratulations. Look, it has really never been a more exciting time in computing. And the reason is, just as you said, it’s only been two and a half years since generative AI took off. I don’t know about you all, but I remember hearing the buzz and getting on and just starting asking questions, putting prompts in and just sort of being amazed at the depth of the knowledge base in these models that they were able to relay back to you as an individual.

But it was still a novelty at that time. And when you think about what’s happened over the last two years, we’ve gone from novelty to people starting to figure out how to take generative AI and now agentic AI, reasoning, as we have expanded capabilities, and how to really apply them. And so it’s brought us to a point where computing has never been more important in terms of its ability to impact our lives, our personal lives and certainly, in a dramatic way, our business processes. So the challenge is scale. It’s absolutely scale.

It’s how to be on a faster pace of innovation than we and our peers in the industry have ever been on and how to drive technology forward. This AI will be embedded everywhere. So it is in everything from supercomputers to the largest data centers to all of your PCs. If they’re not already, they’ll be AI enabled. And you saw the latest OpenAI investment to really take a serious run at a wearable, where even how you get at your AI is simpler and simpler.

So very, very exciting times, incredibly pivotal time.

Josh, Interviewer, TD Cowen: Awesome. Thank you for that. And I mean, when you talk to semis investors, they’re perpetually worried about digestion and overinvestment. But when you talk to you and listen to you speak and listen to technology companies, it’s all about more and solving problems. I mean, how are your conversations with your customers?

I mean, is there any concern of overbuilds? Or is it all about, again, trying to solve problems?

Mark Papermaster, CTO, AMD: Yeah. You know, there is a huge investment people made. And you think about the last just literally last twelve months, there’s just been a huge ramp of investment, twelve to eighteen months, in building up AI capability. And no one could afford to be left behind. So there has been a big capacity buildup.

But now what you’re seeing is a rationalization. What most businesses are doing, the CIOs I talk to, they’ve run their proofs of concept. They’ve gotten the low-hanging fruit of how they’re applying AI and they now have a plan. They’re targeting which areas they want to go after to dramatically improve their productivity. And what they’re also realizing is that, hey, wait a minute, the investment can’t just be on the AI and building up that capability.

My old workloads didn’t go away. I still have to refresh my server farm because I’m running all my payroll. I’m running all of my CRM. I’m running all the applications which are not massively parallel like you have in a generative AI application. They’re best suited for an x86 CPU, which is where you’re going to get the absolute best total cost of ownership.

So that investment is coming back. There’s also a refresh cycle with Windows 11 on PCs. So I think everyone’s looking much more lucidly at the broader compute landscape and realizing, yes, they’re going to be growing their AI capabilities year after year, particularly as inference starts to take off. I’m sure we’ll talk more about that later. But they’re also thinking much more, as I said, lucidly about their broad IT needs because it turns out AI is driving overall a much higher demand for computation.

Josh, Interviewer, TD Cowen: So I want to dive into AMD’s specific role in that AI story for the industry. I think given all the attention that’s put on it, we forget that MI300 and MI325 are really AMD’s first explicit data center accelerator parts. You’re close to launching, you’re officially launching your MI355 part. Where are we in the maturation of your accelerator franchise? What did you learn on MI300 and MI325?

And what are the next steps as you roll out MI355 and then MI400, MI450 next year?

Mark Papermaster, CTO, AMD: That’s a great question. And it’s really interesting when you look at our journey in AI because people think, okay, we showed up in December of 2023, there’s the MI300. We had a great ramp last year, fastest ramp of any product ever in AMD’s history. It went from virtually zero revenue in 2023 to $5 billion last year. So it was indeed our first step into dedicated AI GPUs for the data center.

But our journey was over literally a decade because while we were focused on getting leadership CPUs out that drove the key catalyst of the turnaround of AMD, we had been investing in GPUs for compute. We had won federal grants to drive how CPUs and GPUs could work best together. That led to the win for AMD, with AMD CPUs and GPUs in what are now the world’s number one and number two supercomputers. So we’ve been the top supercomputer for three years now running. And so it was those seeds that then drove our software investment. Just like NVIDIA first developed CUDA for high performance computing in national labs and then extended it to AI, we did the same.

So we took that AI stack for HPC. And if you think about what we did last year, we hardened it as MI300 came up and went into production across major hyperscale installations. And so you ask, how do we think about our roadmap going forward? We’re committed to a very, very fast cadence of new products.

We’re on actually a year, year-plus kind of cadence in getting products out, just like, you know, the dominant player in the GPU industry. And what you’ll see us do is repeat what we did in CPU for servers or CPU and APUs that we have in the PC and embedded market. And that is we’re going to come out with great advantages every product cycle and we’re going to win on bringing total cost of ownership advantage, but also innovation and also key partnerships. So you look at the MI350 family that we’ll be sharing a lot more detail on June 12. We have an event called Advancing AI in San Jose.

And what we’ll talk about is how we stay in the exact same universal baseboards, so the same infrastructure standard that we’re in today, yet we bring a 35x performance improvement. And that’s with design changes, that’s with optimized math formats to get you more efficient in inferencing and more. So that’s the next translation and we’re well along on our design of the MI450 family. So that’s a 2026 deliverable. Again, it’s going to be significant performance improvements, but it’s also going right after massive scaling.

So it’ll be able to take on even the largest training workloads.

Josh, Interviewer, TD Cowen: And you guys have been clear that for the Instinct family, you feel you can best compete in inference first and then training longer term. Can you talk about the broadening of the inference workloads and customers that you’re able to service? And in particular, again, I realize you have the event coming up, so there’s only so much you can say, how MI350 could potentially broaden your engagements given what technically you’re going to be able to bring on the universal baseboard?

Mark Papermaster, CTO, AMD: Yes. So when you think about where we are today, how did we get those gains on MI300 and MI325? It was inferencing. That’s where we brought advantage. We designed in more memory.

We had the world’s best experience in chiplet technology. We leveraged that with MI300. So we had an eight-high stack of high bandwidth memory, and that is in immediate silicon proximity, silicon-to-silicon connectivity to our GPU and IO complex. Right? And so it was an incredibly efficient inference engine.

In fact, to the point that when DeepSeek came out, people realized right away, my God, I can run DeepSeek on one AMD GPU where it takes two of the competitor’s. So we really leverage technology to get that inference advantage. We have benchmarks out there with MLPerf, and again the DeepSeek and Llama results out there that show that advantage. MI350 will continue the same. Again, easy to adopt from an infrastructure standpoint, but we’re going to keep that memory advantage.

We’re going to keep a throughput advantage. We couldn’t be more excited about what MI350 will be doing. And it also will start us down the training path. So what we’ve done is enhance for MI350 our networking capability. So we had invested in Pensando.

And so our AMD Pensando team has created an AI network interface chip, which is finely tuned to accelerate our AMD Instinct platforms. And as well, we partnered with the industry. This is our forte at AMD. And so what you’ll see is different kinds of configurations, different networking solutions, different OEMs providing the tailoring that you need for your workloads. And that applies of course to hyperscalers.

Hyperscalers are going to invest and they’re going to have a very tailored design. But it turns out enterprise equally has such a breadth of inference and application. And they’re all deciding right now how they’re going to start deploying their inferencing. What’s that combination of on prem? What do I run in the cloud?

Typically on prem is more of their base, day-after-day inferencing applications. Yet when they need to tune a model, they’re going to do some training. And MI350 starts us down that training path. We’ll build clusters of thousands of GPUs, not hundreds of thousands, but thousands of GPUs that will be built in clusters around MI350. And then again, MI450, full scalability for even the most demanding training workloads.

Josh, Interviewer, TD Cowen: I wanted to follow up on that last point on enterprise. I think we’ve traditionally thought of enterprises’ AI forays as going through cloud vendors. It seems like, from speaking with you the last couple of days, that you’re seeing more pull. Or maybe it’s just at the point where there’s enough capacity where the enterprise customers can be serviced. But what are the sort of applications where you envision direct engagements with enterprise customers?

How meaningful can that be? Because we’re all focused on the big checks from the hyperscale vendors. But I’d be curious to hear your thoughts on enterprise AI adoption as well.

Mark Papermaster, CTO, AMD: Well, people really underestimate, I think, the impact of DeepSeek. Because what DeepSeek showed is that you could create an open model, but you could also bring innovations that allowed more efficiency. And so not everything has to be run on many-billion, hundreds-of-billions and trillion-parameter large language models. When you focus tasks, which enterprise typically is doing, they’ve got specific agentic tasks they’re doing for their company to really speed their productivity, they’re going to be able to focus more in their enterprise applications. And so you’re seeing CIOs and heads of infrastructure start to hone their strategy.

No surprise, it’s sort of landing where they’ve been for years on their CPU compute, meaning it’s landing on a predominantly hybrid model. They’re running on prem where it’s just more cost efficient, or where the data they have, frankly the models they have, the weights they have that are trained on their proprietary data, they don’t want to leave the premises. And so they’re making that investment to run in their controlled IT, yet they’re still hybrid. They’re running on clouds where they need large compute clusters. You don’t run those constantly.

You run those when fine tuning your models that you’re running. And so they’re leveraging the cloud for that as well as bursting to the cloud, again, just like you see in CPU operations. One example I’ll give you is health sciences, so drug discovery. When I talk to companies in this field, they are getting vast improvements in their time to discovery and their time to market of new drugs and new treatments. But it’s incredibly proprietary with what they had.

Their data is gold. It’s not that you can’t trust the cloud, of course you can. And we’ve as an industry tackled those challenges, and we’ve leaned in at AMD with confidential compute in a huge way, which gives you even more trust in the cloud. But what they want is complete control over their crown jewels. And so at this stage of the game, we’re going to see that play out in a number of industries.

Josh, Interviewer, TD Cowen: So some of the earlier feedback on your Instinct family was that the software needed work and maturity. Enterprise seems like it’s amongst the harder challenges to solve for from a software standpoint. You’ve moved to biweekly releases on ROCm. I’ve asked Matt directly, like, how do I know those biweekly releases are good? How should we as investors from the outside looking in judge and analyze the capabilities and maturation of your software stack?

Yeah.

Mark Papermaster, CTO, AMD: That’s a great question. And the first thing I want to do is just sort of share a context that may be helpful to you. It’s a reality context. And that is, as I said, we were working on the ROCm stack for years, but we went to production in December 2023. So in 2024, no surprise to you, the focus was on really hardening that stack, making sure that it ran flawlessly, that people could of course just bank their business on that.

So we went to full production level at Meta. If you look at many of the Meta properties that you run on, you don’t know it but inferencing is running on AMD in the background. We look at the production instances in Microsoft running on Azure on MI300 as well as first party applications there. Oracle brought up on MI300. So last year was focused on a number of hyperscalers in terms of getting them to full production level.

Everyone will benefit from that because now you have a hardened ROCm stack. But what we didn’t prioritize last year, because we had to, I’ll say, focus on the fundamentals of getting to a full production level and getting the performance attainment that we knew we could achieve, what we didn’t do last year was maintain the software for the broad community at the rate that they need. That has been addressed in 2025. So in the first quarter, as you said, we went from quarterly to literally every-other-week software promotions of the new changes.

AI is nothing but a constant change rate of tuning and performance improvements. By the way, look at ours or a competitor’s, look at the performance of any product you release and look at that performance one year later. You’ll see it’s typically doubled or gone 2.5x because that’s the kind of software improvements that you bring out over time. So we did that last year for our hyperscalers and we’re now doing that in parallel for the hyperscalers and the whole community. As well as a lot more communication: we’re putting out blog posts as well as documentation that we brought to bear.

So we’re really excited with now our support for the community.

Josh, Interviewer, TD Cowen: Last one on Instinct, I promise we’ll move on. Your plans for your rack-scale offering are different than your competitors’. I was wondering if you could maybe speak to your view on the appetite and specifically what customers want from rack-scale offerings? And what are your plans with ZT Systems now that it’s yours? What’s your plan for your go-to-market there?

And how is it different than your large competitors?

Mark Papermaster, CTO, AMD: Well, first off, it should be recognized by all that rack scale is hard. I’ve grown up with rack scale. If you look at my history, I was years at IBM before I went on to take this role a dozen years ago. And when you recognize the challenges, you realize that you actually have to architect for rack scale from the very beginning. And so what we’ve done with the ZT acquisition is really strengthen our hand.

It brought in 1,200 engineers that know how to design for rack scale, and it was part of a design and manufacturing company, ZT Systems. We’ve just recently announced the divestiture of the manufacturing side, but those engineers, those 1,200 rack-scale design engineers, they are skilled at designing for the highest performance and the ability to cool, whether it be air cooled or liquid cooled, a deep design skill that they have. But they were brought up in a design and manufacturing house. So everything that they think about in terms of that optimization I just described is equally with a focus on manufacturability. And that’s what’s key at rack scale: can you manufacture in such a way that you have signal integrity across all of the myriad connection points across that cluster that you build out?

Do you have thermal management? I mean, the power demands of each of these GPUs are going up dramatically because people are trying to get more compute efficiency per square foot in the data center. And so ZT really allows us to bring that design for high performance and manufacturability. And we didn’t wait until we closed. We actually put a consulting contract in place with them before we closed.

They directly influenced our MI450 design for rack scale. And now they’re helping to speed that MI350 to market now that we’ve closed. Very, very key addition to the company.

Josh, Interviewer, TD Cowen: I’m going to shift gears, or I guess shift frequency is probably more appropriate, to the CPU side. On client in particular, your revenue has significantly outperformed your largest competitor and also a lot of third party data. A lot of that on the revenue side has been from ASP gains. I was wondering if you could elaborate on what’s driving the ASP change, the mix underneath, and how much room you think is still left on the PC side for those share gains and how durable those ASP gains in particular have been?

Mark Papermaster, CTO, AMD: Well, we are very much focused on revenue share gain. We don’t have a fab to fill. We’re trying to drive the best financials for the company. And so what we’ve really focused on is delivering the best notebook performance with the longest battery life and with the top AI capability. People want to future proof their design.

If you look at the market share results, we’ve been shipping AI PCs for over two years now. And if you look, we’re number one in terms of selling these AI PCs that have actually been activated with Windows Copilot. So people are really wanting to leverage not only the technical performance capabilities but also the future proofing for workloads. And of course, Microsoft just recently announced a number of exciting changes in Copilot as Copilot becomes more pervasive across the whole office suite of applications. And so I think people are going to see it more and more as indispensable.

But that’s where we focused is on that capability of bringing the best overall capability for the best price. It’s still a great TCO play, but it’s really about the solution value that it brings. Likewise, on desktop, we’ve just got at this point a dominant share. It’s just grown dramatically because we have a daunting performance leap. When people buy a desktop, you’re buying that because you’re running your most demanding work sheets, your spreadsheets on it, your applications that are like in workstation mode, you want it right under your desk.

You also might be a gamer. When you run with our high performance CPUs, you get dramatically better gaming performance. So yes, that revenue share gain is 100% attributable to the leadership roadmap that we have, and we don’t intend to slow down.

Matt Ramsay, VP of IR and Financial Strategy, AMD: Josh, maybe I could double click a couple of things there. And I’d be remiss if I didn’t say thanks to everyone in the room at TD Cowen. You guys know that I was on this stage with Mark last year doing the keynote in a little bit of a different capacity, and it’s great to see all of you guys continue to partner with your firm over time. This place meant a lot to me. So thank you for allowing us to be here.

Just to double click on the client point, and we did grow in the first quarter revenue, I think, 68% year over year in our client business. And if I was an investor, I would ask the same question, is that sustainable, right? About two thirds of that growth was ASPs, which is gaining share in much more revenue rich and margin rich parts of the market. We did grow unit share year over year. But interestingly enough, we get the question all the time, were there pull ins in your PC business ahead of COVID or ahead of COVID, ahead of tariffs?

Hey, my brain is at least functioning a little bit today. We did see a little bit of that. We’re not going to try to deny that it exists, but we’re really working hard to manage the business around that. And our units in the first quarter in our client business were actually down high teens sequentially, so more than typical seasonality. And we actually had sell-through exceed sell-in in our desktop business and in our client business overall.

So we’re really trying to do the best we can to manage the business, to kind of ride out some of these perturbations given all the geopolitics and tariffs that are happening. And I think some of the ASP gains are sustainable. We’ve been giving some commentary, and I think Lisa shared this on our earnings call, that we’re kind of anticipating for right now a little bit sub-seasonal in the back half of the year. But if the PC market acts normal, I think we’ll be in a really, really good position to benefit from that. But right now, I think being pragmatic about the environment is sort of responsible, and that’s what we’re doing.

But all of the things that we’ve gotten and the share gains that Mark talked about in the right parts of the market, I think, are going to be with us for a bit.

Josh, Interviewer, TD Cowen: Yeah. And the other one we get as we look into the back half of the year is you’ve previously talked about expectations of margins in the second half of this year to be better than the first half of this year. I think the concern is that the data center GPU franchise, as you’ve been very clear, is gross margin dilutive, at least for now. So what’s driving the confidence in the back half margin strength? And maybe you could talk about some of the underlying mix within these segments that’s helping support margins.

Mark Papermaster, CTO, AMD: Let me just start with a macro view and Matt, I’m sure you’ll chime in with your thoughts. But when you look at our focus on enterprise and what we’re doing across both the PC space and server, what we’re seeing, I mentioned earlier that companies are realizing that although they spent quite a bit of their wallet share on creating their base AI capability over the last year and a half, they now have to go back and refresh. So you think about commercial PCs. We’re incredibly well positioned in terms of that value prop I just described: our performance capability, our leadership AI enablement on a PC and TCO advantage. So in commercial PCs, we’re seeing strong growth and it couldn’t have been highlighted more than Dell announcing over 22 platforms with AMD.

Dell had been a long holdout of actually adopting AMD for commercial PCs. And so now with a broader portfolio and the ability to come into those leaders that make the buying decisions across enterprise and to have that broad complement of offerings across everything from their PCs, across all of their data center needs, it positions us very well. So what we’re seeing is actually tailwinds behind us for commercial in the second half of the year for our EPYC servers. I think AI has given us a bit of an unexpected tailwind. As I said, people now realize they’ve got to go back and reinvest in their CPU complexes and then likewise across commercial PCs.

Matt Ramsay, VP of IR and Financial Strategy, AMD: Josh, just to add a couple of things to what Mark mentioned. The gross margin at AMD has always been driven by mix. And the mix of the business is changing. We had to, unfortunately, because of some export restrictions into China, basically pull out about $1 billion in what was planned revenue for MI308 shipments into China this year. That was at the bottom of the margin stack on our data center GPU business.

We’re sort of reselling and now building inventory again in our console business. We had been sort of draining it for a while, and now we’re sort of shipping back in line with consumption. Our client business overall is just in aggregate larger than you would have thought when you were modeling this maybe six months ago. So there’s a lot of moving parts, but I think you’ll see margins expand just a tiny bit in the back half of the year. Now I’ve been saying this in a lot of different forums, but if the management team does stumble into a big AI deal, we’re gonna take it.

We’re trying to drive footprint, dollar share of gross margin dollars, which drive gross profit and free cash flow, and if the margins are different, it’ll be because the mix of the business is drastically different. But as Mark said, inside of client, inside of server, the margins are getting better within those segments because of the enterprise play.

Josh, Interviewer, TD Cowen: All right. Well, unfortunately, we’re out of time. But Mark, thank you so much for coming and kicking off the conference. Matt, thank you for coming home. That was less weird than I thought it’d be.

Mark Papermaster, CTO, AMD: Josh, thank you, and thanks to TD Cowen. Thank you, everybody here.

Josh, Interviewer, TD Cowen: Thank you.

Mark Papermaster, CTO, AMD: Thank you all.

This article was generated with the support of AI and reviewed by an editor. For more information see our T&C.