Michael Burry, the man behind the “Big Short” during the Great Financial Crisis, is shorting the AI trade because hyperscalers are depreciating their GPU chip investments over useful lives of more than three years. He thinks those useful lives should be under three years.

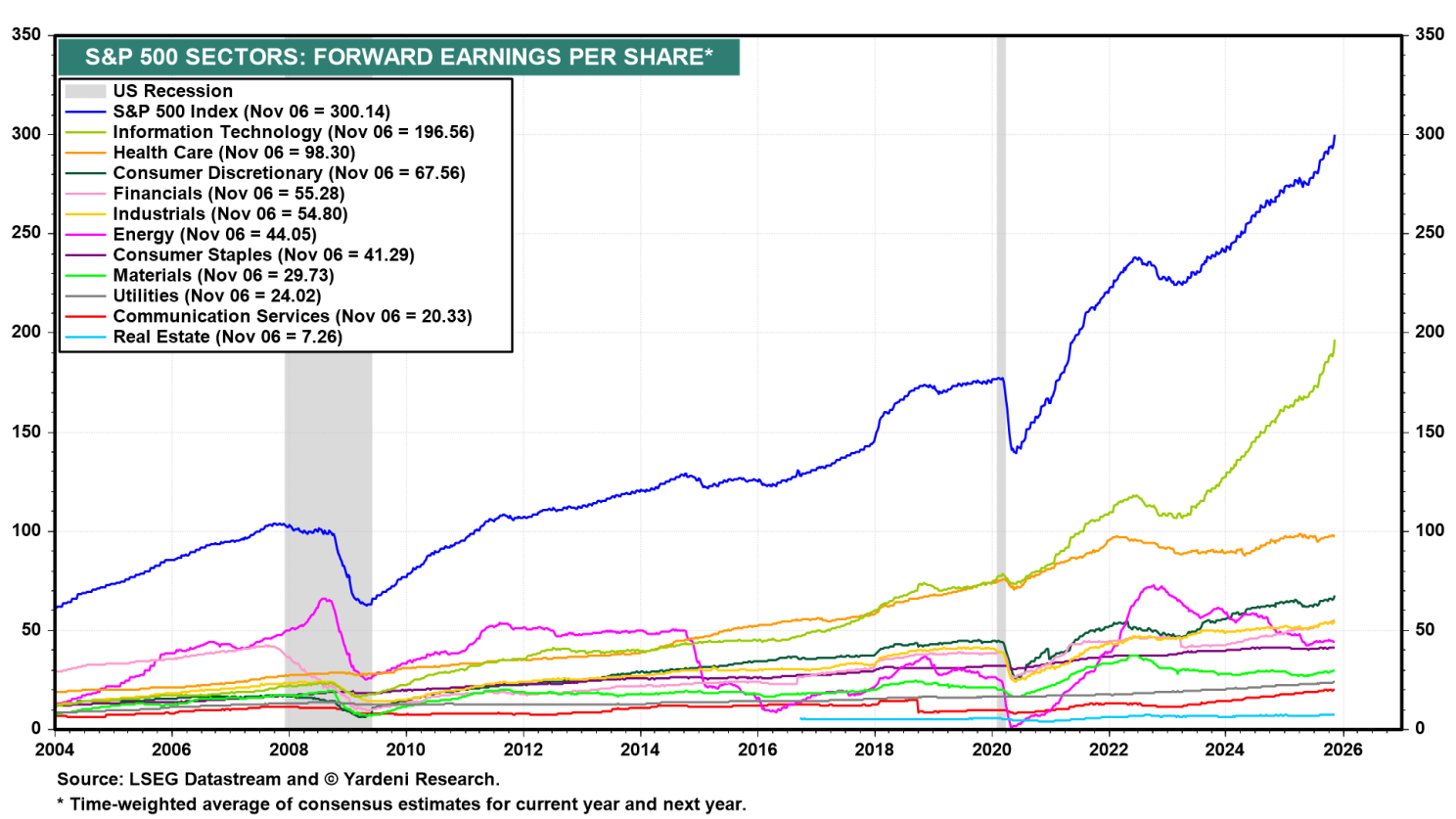

That is a reasonable concern given that the forward earnings of the S&P 500 have been led higher since the start of the Roaring 2020s by the Information Technology sector (chart).

Consider the following:

1. Complex issue

The depreciation of GPU chips by hyperscalers (like Google (NASDAQ:GOOGL), Microsoft (NASDAQ:MSFT), Meta (NASDAQ:META), and Amazon (NASDAQ:AMZN)) is a complex and current topic in financial accounting, with significant debate over the appropriate lifespan.

Hyperscalers are stretching GPU depreciation schedules, a move that lowers annual depreciation expense and boosts reported earnings. Critics argue that this is aggressive accounting because GPUs often become obsolete faster than those schedules assume.
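To see why the useful-life assumption moves reported earnings, here is a minimal sketch of straight-line depreciation. It assumes a hypothetical $10 billion GPU purchase with zero salvage value; the figures are illustrative, not any hyperscaler's disclosures, and the function name is ours.

```python
def annual_straight_line_depreciation(cost: float, useful_life_years: float, salvage: float = 0.0) -> float:
    """Annual straight-line expense: (cost - salvage) / useful life."""
    if useful_life_years <= 0:
        raise ValueError("useful life must be positive")
    return (cost - salvage) / useful_life_years


# Hypothetical $10 billion of GPUs, assumed zero salvage value.
COST = 10_000_000_000

# Same hardware, different assumed useful lives.
for life in (3, 4, 6):
    expense = annual_straight_line_depreciation(COST, life)
    print(f"{life}-year life: ${expense / 1e9:.2f}B per year")

# Pre-tax earnings difference between a six-year and a three-year schedule.
shorter = annual_straight_line_depreciation(COST, 3)
longer = annual_straight_line_depreciation(COST, 6)
print(f"Pre-tax boost from 6 vs 3 years: ${(shorter - longer) / 1e9:.2f}B per year")
```

Doubling the assumed life from three to six years halves the annual charge, from about $3.33 billion to about $1.67 billion, lifting pre-tax earnings by roughly $1.67 billion a year in this example without any change in the underlying business.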

2. The useful life debate

Many major hyperscalers disclose an estimated useful life of five to six years for their AI server equipment, including GPUs. That extends their historical depreciation schedules for general-purpose servers, which were often around three years. Companies like Microsoft and Oracle (NYSE:ORCL) have been cited as using or factoring in a useful life of up to six years for their new AI chips and servers.

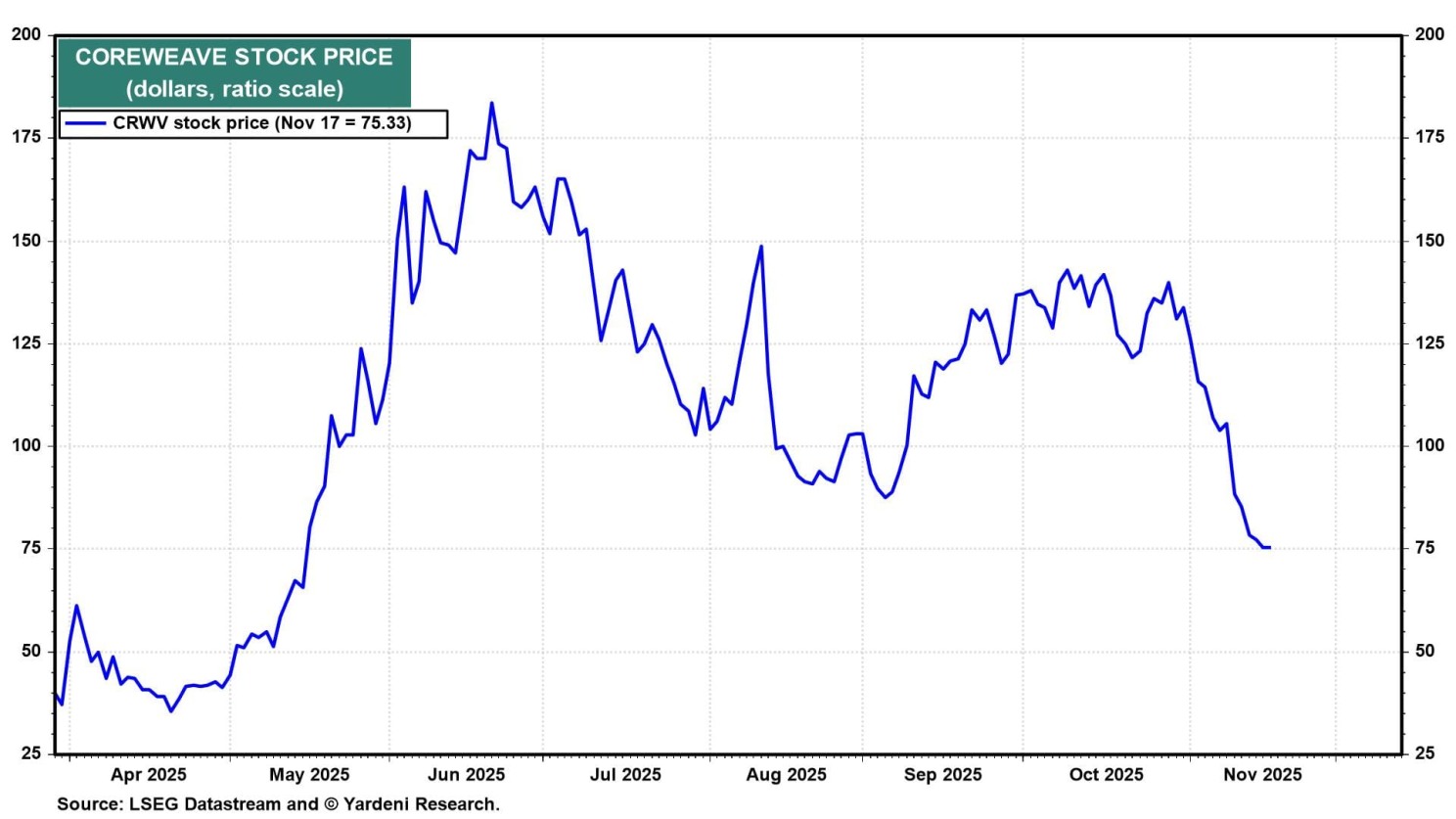

Cloud GPU rental company CoreWeave (NASDAQ:CRWV) also extended its GPU depreciation period to six years, from four years, in 2023 (chart).

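Schedules do differ across companies. Microsoft and Alphabet (Google) depreciate their servers over six years, and Meta lengthened its schedule to five and a half years in 2025. Amazon (AWS) has moved the other way, shortening the useful life of a subset of its servers to five years from six in 2025.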

3. Rapid obsolescence

Critics, including some prominent investors, argue that the true economic lifespan is much shorter, perhaps one to three years. Nvidia (NASDAQ:NVDA) has moved to a one-year product cycle for its significantly more powerful and energy-efficient AI chips, following the Blackwell generation with the upcoming Rubin generation.

This rapid innovation can make older chips economically obsolete for high-end AI training workloads much faster than a five- to six-year schedule suggests. High utilization rates (60%-70%) in demanding AI workloads also contribute to faster physical degradation (chart).

4. Older chips still useful

Hyperscalers justify the longer depreciation schedule by arguing for a value cascade model. They contend that older generation GPUs, once replaced in top-tier training jobs, are simply cascaded down to power less computationally intense but high-volume inference (running the model) or other tasks, where they can still generate significant economic value for years.

They also cite continuous software and data center operational improvements that extend the hardware’s life and efficiency.

5. Bubble risk

If depreciation schedules don’t align with real-world replacement cycles, companies may be overstating their profitability and underestimating the capital-intensive nature of AI infrastructure. That would increase the chances that the AI boom is turning into an AI bubble that may be about to burst.
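The mismatch can be made concrete with a minimal sketch, assuming hypothetical numbers: $10 billion of GPUs booked over six years but actually retired after three, straight-line depreciation, and zero salvage value. None of these figures come from company filings, and the helper name is ours.

```python
def net_book_value(cost: float, book_life_years: float, years_elapsed: float, salvage: float = 0.0) -> float:
    """Carrying value after `years_elapsed` years of straight-line depreciation."""
    if book_life_years <= 0:
        raise ValueError("book life must be positive")
    annual = (cost - salvage) / book_life_years
    # Depreciation stops once the full book life has elapsed.
    accumulated = annual * min(max(years_elapsed, 0.0), book_life_years)
    return cost - accumulated


# Hypothetical: $10 billion of GPUs booked over six years but retired after three.
COST = 10_000_000_000
BOOK_LIFE = 6
ECONOMIC_LIFE = 3

expensed_on_books = COST - net_book_value(COST, BOOK_LIFE, ECONOMIC_LIFE)
expensed_if_matched = COST - net_book_value(COST, ECONOMIC_LIFE, ECONOMIC_LIFE)
write_down = net_book_value(COST, BOOK_LIFE, ECONOMIC_LIFE)

print(f"Expensed over 3 years on a 6-year schedule: ${expensed_on_books / 1e9:.2f}B")
print(f"Expensed over 3 years on a 3-year schedule: ${expensed_if_matched / 1e9:.2f}B")
print(f"Book value left to write off at retirement: ${write_down / 1e9:.2f}B")
```

In this example, reported pre-tax profit over the three years runs about $5 billion higher than a matched schedule would show, and that $5 billion surfaces later as a write-down when the chips are retired. If the cascade argument in section 4 holds and the chips stay productively in service, no write-down is needed; that is exactly the point in dispute.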

6. Our bottom line

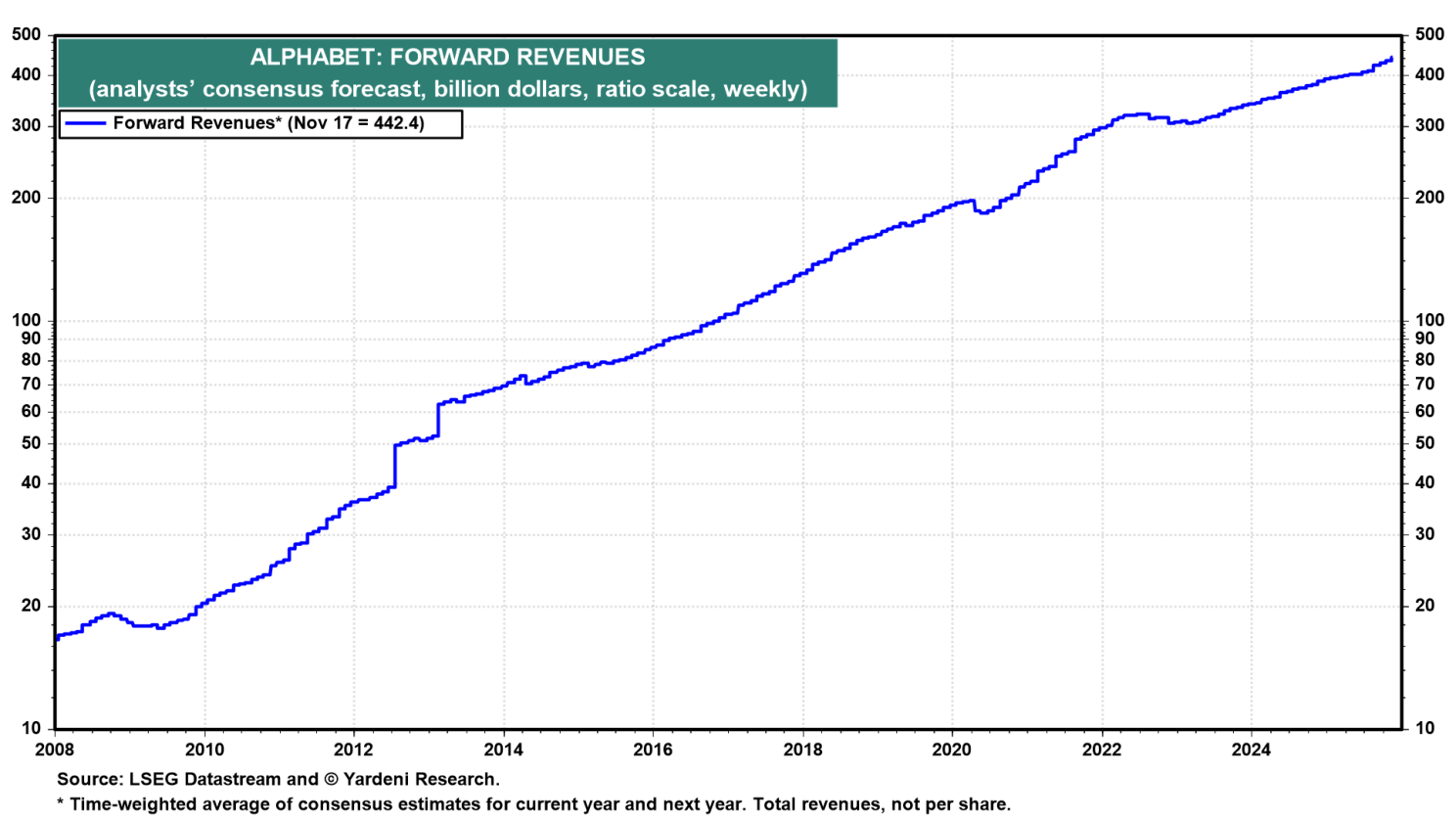

We side with the hyperscalers rather than Michael Burry in the depreciation debate. Data centers existed before AI caught on in late 2022, when ChatGPT was introduced. In 2021, there were as many as 4,000 of them in the US, driven by rapidly increasing demand for cloud computing. Many are still operating with their original chips, which suggests that servers can remain in productive use well beyond three years. The revenues and earnings of the hyperscalers continue to rise rapidly (chart).