
When Qualcomm unveiled its new AI200 and AI250 data center accelerators this week, the market saw a familiar story: another chipmaker trying to chip away at Nvidia’s stranglehold on AI infrastructure. Qualcomm’s stock jumped 22%, its biggest single-day gain in nearly seven years, as investors cheered the news.

But beneath the headlines, a more important shift is emerging, one that could upend how investors think about the next phase of the AI boom.

The AI hardware race, long dominated by compute horsepower, may be pivoting toward something far less glamorous but potentially more decisive: memory.

Qualcomm is betting that the AI chip race is shifting away from raw compute and toward memory capacity, and that its large LPDDR-based design could give it a real edge in the fast-growing inference market.

From Compute to Capacity: The Bottleneck No One Is Watching

For the past two years, Nvidia’s GPUs have defined the AI gold rush, their raw computational power making them indispensable for training massive models like GPT-4 and Gemini. But as AI systems move from training to deployment (a phase known as inference), the physics of performance change.

Modern AI inference workloads are increasingly memory-bound rather than compute-bound. As models grow in size and context windows expand, the challenge isn’t how fast chips can compute. It’s how quickly memory can feed data to those processors.
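A rough back-of-the-envelope sketch can illustrate what "memory-bound" means here. It assumes that generating one token requires reading every model weight once and costs about two floating-point operations per parameter, and it ignores batching and the KV cache. The function name and all input numbers (a 70-billion-parameter model, 2 bytes per weight, 3,000 GB/s of bandwidth, 1,000 TFLOPS of compute) are hypothetical values chosen for illustration, not specifications of any chip mentioned in this article.

```python
def decode_tokens_per_second(param_count, bytes_per_param,
                             mem_bandwidth_gbs, peak_tflops):
    """Return two upper bounds on single-stream decode speed (tokens/s).

    Simplifying assumptions (illustrative only):
      - every weight is read from memory once per generated token
      - each parameter costs about 2 floating-point operations per token
      - batching and KV-cache traffic are ignored
    """
    model_bytes = param_count * bytes_per_param
    memory_bound = mem_bandwidth_gbs * 1e9 / model_bytes
    compute_bound = peak_tflops * 1e12 / (2 * param_count)
    return memory_bound, compute_bound


# Hypothetical inputs, not vendor specifications.
mem_tps, comp_tps = decode_tokens_per_second(
    param_count=70e9, bytes_per_param=2,
    mem_bandwidth_gbs=3000, peak_tflops=1000,
)
limit = "memory" if mem_tps < comp_tps else "compute"
print(f"memory limit:  {mem_tps:,.1f} tokens/s")
print(f"compute limit: {comp_tps:,.1f} tokens/s")
print(f"bottleneck: {limit}")
```

Under these assumed numbers the memory ceiling sits far below the compute ceiling, which is the situation the paragraph above describes. Real deployments batch many requests together, which changes the balance.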

"It’s like having a supercar stuck in traffic," says one industry analyst. "The compute cores are ready to roar, but the data highway is too narrow."

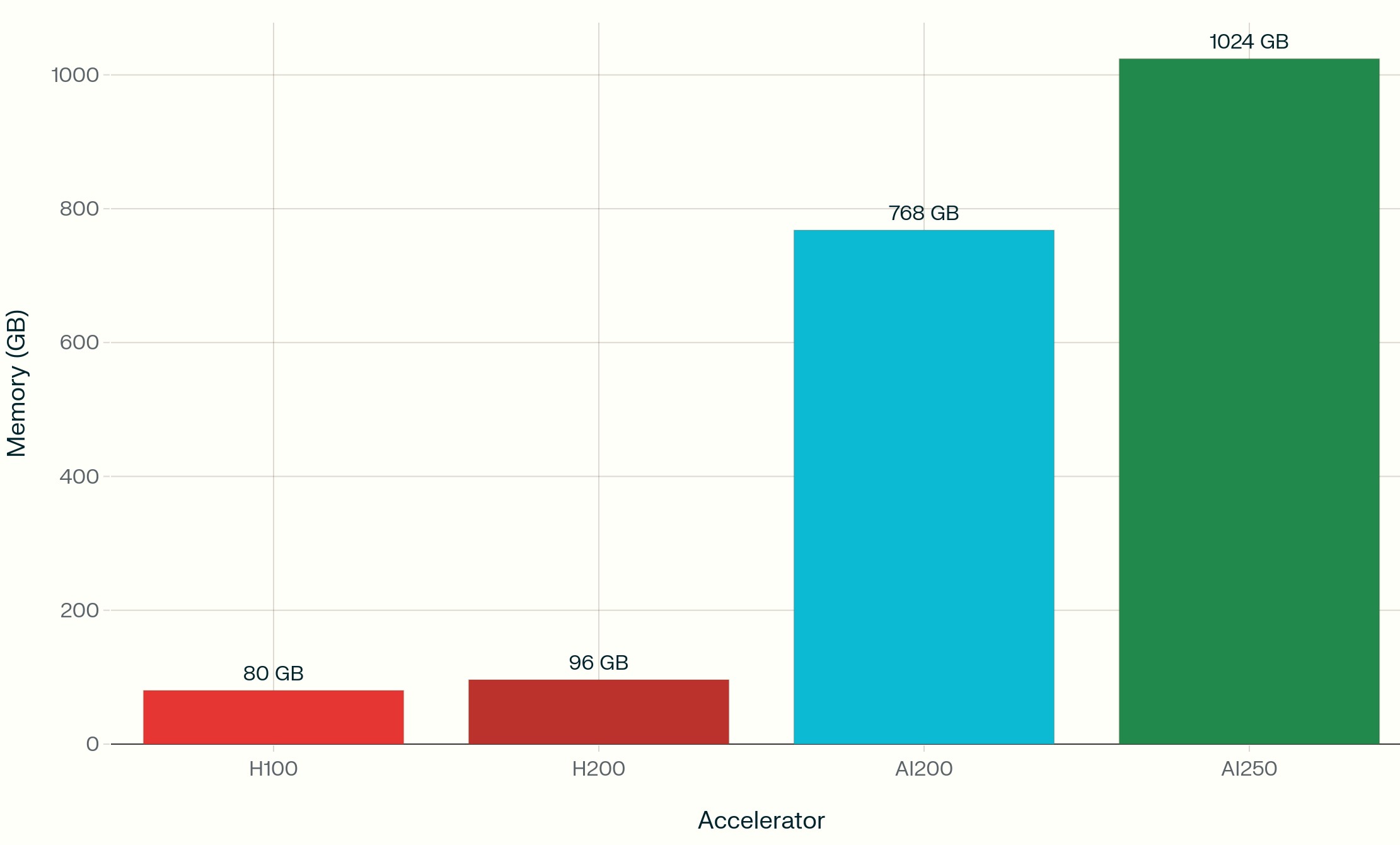

This is where Qualcomm’s bet looks different. Each of its new AI accelerator cards includes up to 768 gigabytes of LPDDR memory, about 10 times more than Nvidia’s H100 GPU configuration. Instead of using expensive High Bandwidth Memory (HBM), Qualcomm is using the low-power LPDDR technology it perfected in smartphones.
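To make the capacity comparison concrete, here is a minimal sketch that estimates whether a model's weights fit on a single card. The 768 GB figure comes from the paragraph above. The 80 GB figure is the commonly listed H100 capacity and is not stated in this article. The 70-billion-parameter model, the 2 bytes per weight, and the 20% overhead allowance for KV cache and activations are all assumptions chosen for illustration.

```python
def weights_gb(param_count, bytes_per_param):
    """Size of the model weights in gigabytes (1 GB = 1e9 bytes)."""
    return param_count * bytes_per_param / 1e9


def fits_on_card(param_count, bytes_per_param, card_gb, overhead_fraction=0.2):
    """True if weights plus an assumed overhead allowance fit in card memory.

    overhead_fraction is a hypothetical allowance for KV cache and
    activations; real overhead depends on context length and batch size.
    """
    needed_gb = weights_gb(param_count, bytes_per_param) * (1 + overhead_fraction)
    return needed_gb <= card_gb


# Hypothetical model: 70 billion parameters at 2 bytes each (16-bit weights).
params, width = 70e9, 2
print(f"weights: {weights_gb(params, width):.0f} GB")
for label, capacity_gb in [("768 GB card", 768), ("80 GB card", 80)]:
    print(f"{label}: fits = {fits_on_card(params, width, capacity_gb)}")
```

When a model does not fit on one card, it has to be split across several, which adds cost and inter-card traffic. That is the practical appeal of a high-capacity design.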

That shift is more than a cost optimization. It’s a rethinking of what matters most in AI hardware.

The "Martini Straw" Problem and the Coming Memory Revolution

Industry insiders have started calling AI’s current bottleneck the "martini straw problem": the compute engine is the glass, but the data flows through a straw. No matter how powerful the chip is, it’s limited by how quickly data can move in and out.

Qualcomm’s approach widens that straw. LPDDR memory (cheaper, denser, and more scalable) offers up to 13 times more capacity per dollar compared to HBM. That makes it possible to run large language models (LLMs) or multimodal AI inference workloads directly in memory, without constant data shuffling.

In practice, that means faster responses, lower latency, and much lower energy draw, all major advantages for data centers where power and efficiency are now critical constraints.

And the timing is right. According to research from Cambrian AI and Grand View, inference workloads will outnumber AI training by a factor of 100 to 1 by 2030. As AI applications proliferate across devices and industries, memory-rich inference could become the defining performance metric of the decade.

The Business Case: Lower Cost, Higher Efficiency, Bigger TAM

For investors, the implications are significant.

AI’s first boom phase rewarded companies that could deliver maximum compute: Nvidia, AMD, and to a lesser extent, Intel. The next wave will likely reward those who can deliver more efficient, lower-cost inference at scale.

Qualcomm’s edge lies in combining its mobile DNA (decades of building chips that run complex workloads within strict power and thermal limits) with data center-grade scalability.

Independent studies cited by the company show its Cloud AI 100 Ultra architecture consuming 20 to 35 times less power than comparable Nvidia configurations for certain inference workloads.

In a world where data center energy consumption is doubling every three years, and utilities are warning of grid constraints, those savings aren’t just nice-to-have. They’re critical.

"If inference becomes the dominant workload, and if power costs continue to climb, efficiency could matter more than peak performance," says one semiconductor analyst. "That’s the space Qualcomm knows best."

Figure: Memory capacity per AI accelerator card for Nvidia H100, Nvidia H200, Qualcomm AI200, and Qualcomm AI250. The AI200 and AI250 carry roughly an order of magnitude more memory than Nvidia’s top AI GPUs, which supports the argument that memory capacity is becoming a critical bottleneck in AI inference.

The Bigger Picture: Shifting Battlefields in the AI Arms Race

This shift from compute-centric to memory-centric AI could also reshape the competitive landscape.

Nvidia’s ecosystem remains unmatched for AI training, thanks to its proprietary CUDA software stack and developer lock-in. But inference (where models are deployed and scaled) is far less sticky. It’s also far more price-sensitive.

That opens the door for companies like Qualcomm to compete on new terms. Rather than building bigger GPUs, Qualcomm is optimizing for the reality that most AI models won’t be retrained every day. They’ll just need to run efficiently, everywhere.

It’s a bet that echoes a pattern from the early 2000s, when Intel’s dominance in high-performance desktops was gradually eroded by ARM-based chips optimized for mobile computing.

Today, that same philosophy (smarter, smaller, cheaper, more efficient) is migrating into the data center.

Risks and Reality Checks

Qualcomm’s path has challenges. The company lacks deep relationships in the enterprise data center space, where Nvidia’s brand and ecosystem are entrenched. Securing design wins from hyperscalers won’t be easy.

But Qualcomm isn’t going it alone. It’s partnering with Saudi-backed Humain, a sovereign AI initiative investing over $40 billion in infrastructure, providing both capital and early deployment scale.

If successful, that could offer Qualcomm a way to leapfrog traditional cloud channels and tap into a wave of sovereign AI spending outside U.S. hyperscalers’ reach.

The Direct Play: Buying Qualcomm at a Discount

At a forward P/E ratio of roughly 15-20x (depending on estimates), Qualcomm trades at a significant discount to both Nvidia’s 52-57x and AMD’s 26-27x. This valuation gap reflects market skepticism about Qualcomm’s ability to compete in data centers, but it also creates asymmetric upside if the company captures even a modest share of the $254 billion inference market projected by 2030.
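The size of that discount can be worked out from the ranges quoted above. The sketch below takes the midpoint of each forward P/E range, which is a simplifying assumption rather than a figure from any analyst, and computes how far Qualcomm's multiple sits below each peer's. The helper names are hypothetical.

```python
def midpoint(low, high):
    """Midpoint of a quoted range (a simplification, not an analyst estimate)."""
    return (low + high) / 2


def discount_to(peer_pe, own_pe):
    """Fraction by which own_pe sits below peer_pe."""
    return 1 - own_pe / peer_pe


# Forward P/E ranges as quoted in the paragraph above.
qcom = midpoint(15, 20)
nvda = midpoint(52, 57)
amd = midpoint(26, 27)

for name, peer in [("Nvidia", nvda), ("AMD", amd)]:
    print(f"Discount to {name}: {discount_to(peer, qcom):.0%}")
```

At the midpoints, the discount works out to roughly 68% against Nvidia and 34% against AMD. The result moves considerably depending on which end of each range is used.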

The company’s fundamentals remain solid: a 2.1% dividend yield backed by a conservative 33% payout ratio, 20 consecutive years of dividend increases, and strong free cash flow supporting both dividends and buybacks (a 6.7% total shareholder yield). Analysts project 11-15% earnings growth through 2026, with the AI data center entry potentially accelerating that trajectory.

The risks, however, are real. Qualcomm faces execution challenges in enterprise sales, where it lacks Nvidia’s relationships, and the AI200 won’t ship until 2026. But for patient investors, the Saudi-backed Humain as an anchor customer (part of a $40+ billion infrastructure investment) and partnerships with emerging sovereign AI initiatives provide revenue visibility that helps de-risk the data center entry.

The Investor Takeaway

For small and mid-size investors, the signal is clear: the semiconductor story is evolving.

AI’s next trillion-dollar opportunity may not come from the next great GPU, but from solving the data bottleneck that makes existing GPUs inefficient. Qualcomm’s memory-first design could prove to be the right architecture for that future, a classic case of a challenger seeing the inflection point before the incumbents do.

With the AI inference market projected to reach $254 billion by 2030, and the edge AI market another $66 billion, Qualcomm’s positioning looks less like a side bet and more like a strategic pivot into the heart of the next computing era.

Nvidia still dominates the training race. But the inference marathon is just beginning, and Qualcomm may have chosen the smarter race to run.