Investing.com -- Building one gigawatt of AI data center capacity costs around $35 billion, according to Bernstein’s analysis, well below the $50–60 billion estimate Nvidia gave on its fiscal Q2 2026 earnings call.

The analysis, based on industry discussions and third-party infrastructure data, puts the cost per GB200/NVL72 rack at about $5.9 million—$3.4 million for compute hardware and $2.5 million for physical infrastructure.
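The per-rack split can be checked with simple arithmetic. The sketch below sums the two quoted components and, assuming the $35 billion per-gigawatt total is simply rack count times rack cost (an assumption for illustration, not something the note states), backs out an implied number of racks per gigawatt. Variable names are illustrative.

```python
# Figures quoted in the article (Bernstein estimates).
COMPUTE_PER_RACK_USD = 3.4e6   # compute hardware per GB200/NVL72 rack
INFRA_PER_RACK_USD = 2.5e6     # physical infrastructure per rack
COST_PER_GW_USD = 35e9         # total cost of one gigawatt of capacity

# Per-rack cost is the sum of the two quoted components.
rack_cost = COMPUTE_PER_RACK_USD + INFRA_PER_RACK_USD

# ASSUMPTION: the per-GW total is rack count multiplied by rack cost,
# with no other cost items outside the per-rack figure.
implied_racks_per_gw = COST_PER_GW_USD / rack_cost

print(f"Cost per rack: ${rack_cost / 1e6:.1f} million")
print(f"Implied racks per GW: {implied_racks_per_gw:,.0f}")
```

Under that assumption the figures imply a little over 5,900 racks per gigawatt.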

The broker said its estimate is more consistent with comments from Broadcom and AMD pointing to roughly $15–20 billion in addressable opportunity per GW, noting that Nvidia is likely “looking ahead to future GPU cycles when per rack and per GW costs will be higher.”

GPUs dominate spending, accounting for 39% of total capex, with Nvidia’s gross profit dollars alone representing roughly 29% of all AI data center costs.

“Given Nvidia’s ~70% gross margins, this implies that Nvidia gross profit dollars account for ~29% of total AI data center spending,” analyst Stacy Rasgon said in a note. Even under lower ASIC margins, accelerated compute remains the largest single item, with ASIC-based racks offering only about 19% in total capex savings.
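The gross-profit share follows from multiplying the GPU share of capex by Nvidia's gross margin. The sketch below runs that calculation with the rounded figures quoted above. With those rounded inputs the product comes out near 27%, so the quoted ~29% presumably rests on unrounded inputs, such as a margin in the mid-70s; that reading is an assumption, not something stated in the note.

```python
# Rounded figures quoted in the article.
GPU_SHARE_OF_CAPEX = 0.39      # GPUs as a share of total capex
QUOTED_GROSS_MARGIN = 0.70     # "~70%" gross margin
QUOTED_PROFIT_SHARE = 0.29     # "~29%" of total spending


def gross_profit_share(gpu_share: float, gross_margin: float) -> float:
    """Share of total capex that ends up as the GPU vendor's gross profit."""
    return gpu_share * gross_margin


# Result using the rounded inputs as quoted.
share_at_quoted_margin = gross_profit_share(GPU_SHARE_OF_CAPEX, QUOTED_GROSS_MARGIN)

# Gross margin that would reproduce the quoted ~29% exactly (illustrative only).
implied_margin = QUOTED_PROFIT_SHARE / GPU_SHARE_OF_CAPEX

print(f"Share at 70% margin: {share_at_quoted_margin:.1%}")
print(f"Margin implied by a 29% share: {implied_margin:.1%}")
```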

CPUs, meanwhile, represent about 3% of spending—roughly in line with switches—and are often bundled as add-ons in GPU-centric systems.

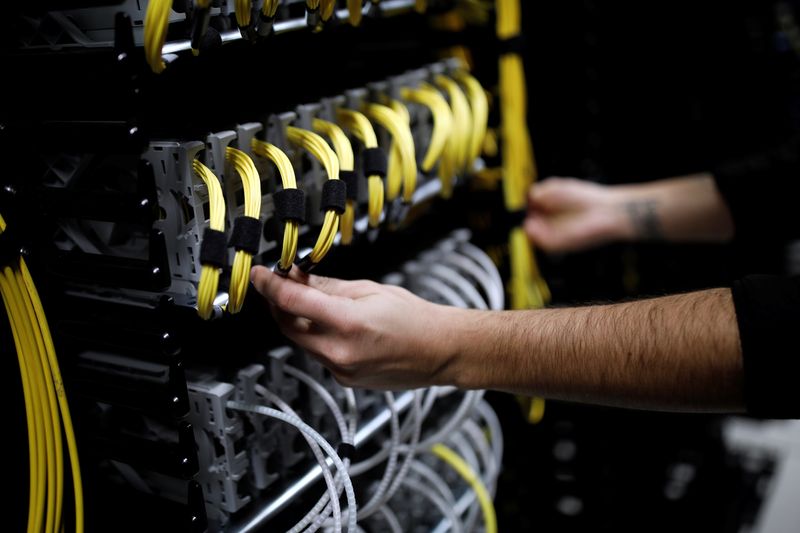

Networking is the next major cost, roughly 13% of total spend, spread across switches, cabling, network accelerators and connectors. Switches alone represent around 3% of the budget, while DPUs, NICs and copper cabling each contribute another 2–3%.

Storage, by contrast, is a minor factor at roughly 1–1.5%. A typical rack holds about two petabytes of storage, or only around $20,000 in hardware cost.

Mechanical and electrical systems take up close to one-third of total spending, according to Bernstein’s report. Major items include generators and turbines (~6%), transformers (~5%) and uninterruptible power supplies (~4%).

Thermal management—split between air and liquid cooling—accounts for about 4% of costs, though Bernstein expects liquid systems to gain share as power density rises.

Foundries, memory and wafer-fab equipment makers each capture roughly 3–4% of a data center’s total capex, or about $1–1.2 billion per gigawatt. The share rises under ASIC-based architectures that yield more chips per dollar of spending.

“With GB200, foundry gets 2.5–3% of data center capex (US$1.1B/GW) and ~1% more if CPU (US$0.3B/GW) too,” Rasgon wrote. At lower ASIC margins of around 50%, the same capital can fund nearly 19% more racks, benefiting upstream suppliers.
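One way to see how a lower margin could translate into more racks is sketched below. It assumes, purely for illustration, that an ASIC costs the same to build as the GPU it replaces, that only the vendor's gross margin changes (from ~70% to ~50%), and that every other cost item stays fixed. None of these assumptions is confirmed by the note, and Bernstein's own model may differ.

```python
# Rounded figures quoted in the article.
GPU_SHARE_OF_CAPEX = 0.39   # GPUs as a share of total capex
GPU_GROSS_MARGIN = 0.70     # approximate Nvidia gross margin
ASIC_GROSS_MARGIN = 0.50    # "lower ASIC margins of around 50%"

# ASSUMPTION: the accelerator's build cost is identical for GPU and ASIC.
# Build cost as a share of today's total capex:
build_cost_share = GPU_SHARE_OF_CAPEX * (1 - GPU_GROSS_MARGIN)

# Price of the same silicon sold at the lower ASIC margin.
asic_price_share = build_cost_share / (1 - ASIC_GROSS_MARGIN)

# ASSUMPTION: all non-accelerator costs are unchanged.
capex_savings = GPU_SHARE_OF_CAPEX - asic_price_share
asic_rack_cost = 1 - capex_savings          # relative to a GPU rack = 1.0

# Extra racks that a fixed budget can fund at the lower per-rack cost.
extra_racks = 1 / asic_rack_cost - 1

print(f"Capex savings per rack: {capex_savings:.1%}")
print(f"Extra racks for the same budget: {extra_racks:.1%}")
```

Under these assumptions the same budget funds roughly 18.5% more racks, close to the "nearly 19%" cited.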

Operating costs are modest relative to capital outlays. Power is the largest, with a gigawatt-scale site consuming about $1.3 billion in electricity annually at $0.15 per kWh. Staffing needs are minimal—some 8–10 people per 20-GW complex—making labor costs negligible.
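The electricity figure can be reproduced directly. The sketch below assumes the site draws a full gigawatt around the clock for a 365-day year (8,760 hours); real utilization would likely be lower, so treat it as an upper-bound illustration.

```python
SITE_POWER_GW = 1.0          # gigawatt-scale site, as in the article
PRICE_PER_KWH_USD = 0.15     # electricity price quoted in the article
HOURS_PER_YEAR = 24 * 365    # ASSUMPTION: continuous full-load operation

# Convert gigawatts to kilowatts, then to kilowatt-hours per year.
site_power_kw = SITE_POWER_GW * 1e6
annual_kwh = site_power_kw * HOURS_PER_YEAR

annual_cost_usd = annual_kwh * PRICE_PER_KWH_USD

print(f"Annual consumption: {annual_kwh / 1e9:.2f} billion kWh")
print(f"Annual electricity cost: ${annual_cost_usd / 1e9:.2f} billion")
```

That works out to about 8.76 billion kWh and roughly $1.3 billion a year, matching the figure cited.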

Utility-scale power has become a key bottleneck, with OEMs such as Siemens Energy and GE Vernova ramping turbine capacity to meet surging data center demand.

Rasgon concluded that true economic costs lean even more heavily toward servers and networking, given shorter depreciation cycles and limited ongoing expenses.

The analyst sees future value accruing to suppliers positioned at bottlenecks or offering higher content, particularly as in-rack power component content rises sharply, to as much as seven to eight times current levels, with the shift to 800-volt high-voltage designs by 2027.