Oracle stock falls after report reveals thin margins in AI cloud business

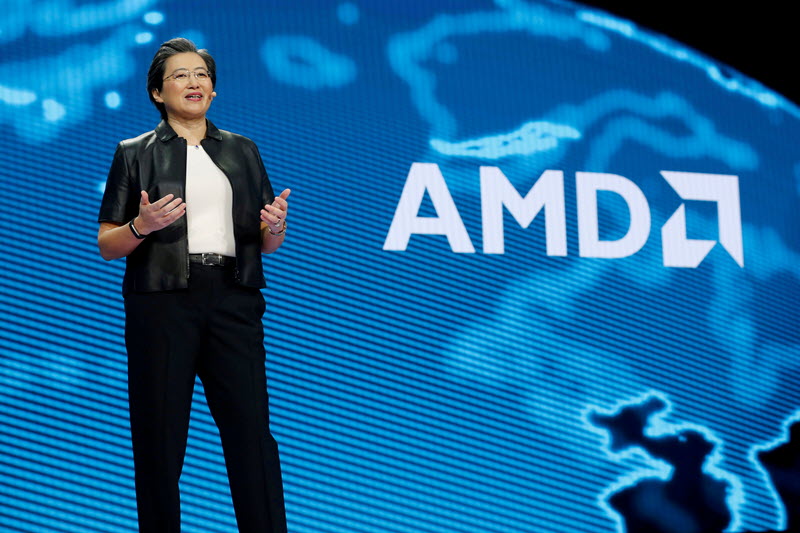

On Wednesday, 03 September 2025, Advanced Micro Devices (NASDAQ:AMD) took center stage at Citi’s 2025 Global TMT Conference, outlining its ambitious AI growth strategy while acknowledging challenges such as supply chain constraints and export controls. The company highlighted its strong financial performance and future product launches, setting the stage for continued expansion in the AI sector.

Key Takeaways

- AMD reported a 32% year-over-year increase in Q2 2025 revenue, reaching $7.7 billion.

- Q3 2025 revenue guidance is $8.7 billion, a 28% year-over-year increase.

- The MI350 GPU ramp and future MI400 and MI500 launches are pivotal to AMD’s AI strategy.

- Export controls on MI308 sales to China pose a challenge, resulting in an $800 million inventory write-off.

- AMD is actively pursuing sovereign AI opportunities with over 40 nations.

Financial Results

- Q2 2025 Revenue: $7.7 billion, representing a 32% increase from the previous year.

- Q3 2025 Revenue Guidance: Expected to reach $8.7 billion, a 28% rise year-over-year.

- Data Center Revenue: $3.2 billion in Q2 2025, a 14% increase year-over-year, despite the impact of export controls.

- MI308 Write-off: An $800 million inventory write-off due to export restrictions.
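
As a rough cross-check on the growth figures above, the implied year-ago numbers can be backed out by dividing each reported figure by one plus its growth rate. The sketch below is illustrative only: the helper name `implied_prior_year` is hypothetical, and because the quoted percentages are rounded, the results are approximations rather than reported figures.

```python
def implied_prior_year(current: float, yoy_growth: float) -> float:
    """Back out the year-ago figure from a current figure and its YoY growth rate."""
    return current / (1.0 + yoy_growth)


# Figures quoted in the summary above, in billions of US dollars,
# paired with their stated year-over-year growth rates.
reported = {
    "Q2 revenue": (7.7, 0.32),
    "Q3 revenue guidance": (8.7, 0.28),
    "Q2 data center revenue": (3.2, 0.14),
}

# Implied year-ago values (approximate, since the growth rates are rounded).
implied = {
    name: implied_prior_year(current, growth)
    for name, (current, growth) in reported.items()
}

for name, value in implied.items():
    print(f"{name}: implied year-ago figure of roughly ${value:.2f}B")
```

The same division works for any figure quoted with a year-over-year growth rate.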

Operational Updates

- MI350 Ramp: Launched in June, with a significant ramp expected in the second half of 2025.

- Future Launches: MI400 and MI500 planned for 2026 and 2027, respectively, maintaining AMD’s annual AI product cadence.

- Supply Chain Management: Addressing bottlenecks in wafer and HBM memory supply through partnerships with TSMC and other suppliers.

- Customer Engagements: Ongoing work with existing customers such as Microsoft, Meta, and Oracle, alongside newly announced customers such as Tesla and X, and collaboration with Sam Altman on the Helios rack and MI400.

Future Outlook

- AI Total Addressable Market (TAM): Estimated to exceed $500 billion by 2028, with ongoing growth.

- Growth Drivers: Expansion with current clients, acquisition of new ones, and penetration into the neo cloud space.

- Sovereign AI Opportunities: Engaging with over 40 nations, with a focus on regulatory compliance and market expansion.

Q&A Highlights

- TCO Strategy: Emphasizes performance and strategic pricing to maximize market share and gross margin dollars.

- Tier 2 and Tier 3 Workloads: Utilizing smaller-scale production models to onboard customers for larger deployments.

- Industry Outlook: AMD views AI adoption as being in its early stages, justifying current capital expenditures.

Readers are encouraged to refer to the full transcript for a more detailed account of AMD’s strategic plans and discussions at the conference.

Full transcript - Citi’s 2025 Global TMT Conference:

Chris Dainley, Semiconductor Analyst, Citigroup: I’m Chris Dainley, your friendly neighborhood semiconductor analyst here at Citigroup. It’s our distinct pleasure to have AMD, Advanced Micro Devices, up next. Although, is the rumor true? Are you gonna change it to AI Micro Devices, or what’s gonna happen there? Anyway, we have Jean Hu, the CFO, and Matt Ramsay, my idol, who parlayed a successful sell-side career into becoming a big mucky-muck at one of the coolest, fastest-growing companies out there.

He’s the VP of financial strategy and investor relations. So thanks again for coming, gang. Thank you. Let’s just

Jean Hu, CFO, AMD: Thank you. Thank you for having us.

Chris Dainley, Semiconductor Analyst, Citigroup: That’s our pleasure. So let’s just dig right into the AI business. You know, maybe talk about how the segments sort of trended this year. There’s been some volatility. We have quite a bit of growth for the AI segment in the second half of this year and next year.

Maybe just give us a timeline on how that business has gone so far, and we’ll just we’ll go from there.

Jean Hu, CFO, AMD: Yeah. I like your first question. It’s about AI. First, just look at the big picture.

AMD is executing very well. When you look at Q2, we delivered $7.7 billion in revenue, and that is a 32% year-over-year increase. And we guided Q3 at $8.7 billion, which is another 28% year-over-year increase. Mhmm. So all our businesses have been doing really well, and the momentum continues.

We’re really pleased with the momentum. On the AI business, if you think about the data center in Q2, we delivered $3.2 billion in revenue. It’s a 14% year-over-year increase, and that’s because we had a significant impact from MI308 sales to China. We couldn’t sell anything to China in Q2.

Unidentified speaker: Yep.

Jean Hu, CFO, AMD: So that was the major impact. We actually had record EPYC server sales. Yep. And in Q3, we guided our data center revenue to be up double digits sequentially. And, primarily, it’s driven by the MI350 ramp.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yep.

Jean Hu, CFO, AMD: We launched it in June, getting to production. In the second half, we’re going to see the significant ramp. For Q3, we also exclude MI308 sales from our guidance. So without that, we are going to see a year-over-year revenue increase in our data center GPU business. Of course, going into next year, we’re going to launch MI400.

And the year after, in 2027, MI500. So we do see continued momentum for our AI business, not only in the second half but going forward.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. I’m sure we’ll hear more on the MI400 and MI500 at the Analyst Day in November. I’m not above a shameless plug for one of my favorite companies. In terms of the forecasts, in the past you guys have given a forecast for the out year or the year, and now you’re not. Maybe just talk about the whys of that.

Is that just because of the volatility, or why are you kind of shying away from the forecast?

Jean Hu, CFO, AMD: Oh, I think, if you think about it, this is a market that has a very large opportunity going forward. Mhmm. And we are literally at a very early stage. We launched our MI300 in December 2023. So we’re at a very early stage of the ramp.

Last year, of course, the first year, we were trying to provide some guidance about the direction of the business. And right now, if you think about the prospects of our business, what we’re focusing on is providing investors with some fundamental drivers of our business. During the Advancing AI event, we talked about our annual roadmap cadence, the execution, and the progress we’re making in networking, software, and system-level solutions. And during the earnings call, we talked about the MI350 ramp, the strong customer interest, and sovereign AI engagements. I think those are the kind of fundamental drivers that will help everybody understand the business direction.

And as far as the revenue guidance, we’re doing one quarter at a time. It’s very dynamic. We guided Q3. We’re very excited about the second half and next year, especially the MI400 launch next year.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. We’ll take whatever we can get. Maybe just talk about the second half drivers, and into next year. I think we have your AI business going, you know, pretty close to $10 billion next year. Is this new customers in the second half?

Is it just existing spending? We’ll get into you mentioned some sovereign growth drivers as well. But, you know, in in your own words, what are the big drivers for the AI business in the second half of this year and next year?

Jean Hu, CFO, AMD: You want to start out?

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. Sure. Thanks, Chris, for having us and for everybody joining here. I think you saw at our AI event in June the number of existing customers

Chris Dainley, Semiconductor Analyst, Citigroup: Yep.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Come up on stage with us. I mean, some of the big ones we’ve been talking about for a long time, with Microsoft and Meta and Oracle. There were some new customers that came and were announced at that event, whether that was Tesla or X, and then Sam was kind enough to come on stage with Lisa and talk about the work that they’re doing and collaborating on with the Helios rack and MI400. So I think you should expect growth from our existing customers, growth from new customers, some growth in the neo cloud space, and the expansion with the MI355 that’s ramping now. The majority of our live deployments were in inference workloads in the prior generations, and that will definitely continue with some of the chiplet advantages we have in the architecture that allow us to have maybe more HBM and higher bandwidth to the HBM, and I think that fits well with inference. But in addition, everything that’s really talked about in the press is about the latest frontier-scale model. Mhmm. Right? 100,000 GPUs going to 200,000, going well beyond that.

Each of these model companies also has sort of tier two and tier three sized models, where with the MI355 we’re breaking into production-level training of these tier two and tier three level models, to transition code that might need to get to FP4 for the next generation and to get people familiar with the software stacks. We wouldn’t, I don’t think, want a first pass at a training model with the customer to be a frontier-level model.

Unidentified speaker: Mhmm.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Right? Lisa uses the term "train to train." And I think we’re seeing traction, not just in inference, but across the customer set in these sort of tier two and tier three smaller-sized, but still production-level, training models to get all the plumbing working and make sure that the customers are familiar with the stacks, so that when we launch Helios next year and beyond, we’ll be positioned to compete for much larger deployments on both the inference and the training side.

Chris Dainley, Semiconductor Analyst, Citigroup: That’s interesting. And actually, one of your customers or potential customers asked me about this. Do you guys see, longer term, the AI chip business almost being like the CPU business, where you’ve got various, you know, tiers and sort of multiple different SKUs all at the same time satisfying, you know, different customers at different levels? Do you expect it to grow into a business like that eventually?

Jean Hu, CFO, AMD: We do believe that there are going to be millions of models. Right? The foundational models, large models, medium-size and small-size models. That’s why we strongly believe, when you look at AMD’s platform, we have CPU compute, GPU compute, and adaptive compute. Yep.

So we can actually support all kind of different size of model. That absolutely is how we are building the company for the longer term.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. And so how do you sort of secure enough wafers and enough peripherals, whether it’s HBM or or what have you? Do you foresee any any issues procuring enough wafers or memory, especially going into next year when, you know, hopefully, me and everybody else’s models come through, we continue to see this impressive growth.

Jean Hu, CFO, AMD: Yeah. It’s incredible time. So when you look at the overall supply chain, there are still multiple bottlenecks. Right? Very tight capacity with advanced process node of wafers.

HBM continues to be very tight, but AMD actually has a really strong operational team and supply chain team. We are one of the largest customers of TSMC. We work with them on CoWoS and on different capacity. On the memory side, it’s the same. So our team has done a lot of work to make sure we have the capacity, from wafers to memory to all the components needed for rack-level scale deployment

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yep.

Jean Hu, CFO, AMD: For next year to support the company’s revenue growth.

Chris Dainley, Semiconductor Analyst, Citigroup: Yeah. Yeah. And then in just looking at your latest and greatest AI TAM numbers, I think it’s gone from $400 billion to $500 billion to now over $500 billion. Can you maybe give us a sense of, like, how you guys come up with that number? What factors go in there?

I don’t even know if it matters because when we’re sitting down in November, it’s probably gonna go up again. But what all goes into that model?

Jean Hu, CFO, AMD: Yeah. Matt spent a lot of time on the TAM analysis. Matt?

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. I think one of the first things that I did joining the company from the outside having covered AMD for a long time externally is to go find out what was in the TAM model. Right? And let let’s go let’s go look at it. Here we go.

Chris Dainley, Semiconductor Analyst, Citigroup: On the sell side question.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. Exactly. It there’s a lot that goes into this. Right? There’s there’s bottom up forecasting of where we see the models going and the size of the datasets that that the customers are putting together.

There are inference use cases across, obviously, the hyperscale arena for first-party properties, but also thinking about how those might get extended into vertical market industries and how AI might be applied there. I mean, I’ve said for a long time that I think as t goes to infinity, right, more and more CapEx and OpEx dollars in basically every industry go into high-performance computing. AI is a significant inflection of that. So to say that those are exact forecasts, they’re not. I think they’re indicative of the fact that we’re in, what, year three of this computing cycle that you could argue is the biggest inflection in computing since the invention of the Internet.

So I agree with that. And, I mean, Chris, to get down to brass tacks a little bit, though, I don’t know that we want to necessarily be in the market of updating the TAM every two seconds. I don’t know that that’s super helpful. I think for us as a company, we are very, very confident this is an explosive and large TAM. I think the market is also agreeing with us on that, given the amount of market cap that’s being applied to this area.

And we what we’re focused on is executing to deliver TCO to our customers and growing and being on an annual cadence and providing competition into this market over the long term and being a scaled participant in the TAM. I don’t I don’t know that we have a TAM problem. No. I I think that we we have plenty of TAM to grow into. And so turning it into turning our conversations into a TAM modeling exercise, I don’t know, is what we wanna do.

I think we focus a lot on it internally, and we have very tops-down and bottom-up views of it. But I think Lisa’s just sort of left it open in her last comments that said it was a good bit more than $500 billion by 2028, and then we certainly see the market growing beyond that.

Chris Dainley, Semiconductor Analyst, Citigroup: Definitely gives us sell-siders something to do and keeps us busy. One thing that you guys mentioned on the previous conference call, I believe, was the sovereign growth driver, sovereign wealth funds. Can you give us any sense of, you know, how big you think this could be, or when you think that this could, you know, potentially start to drive material revenue growth for AMD, and how you’re positioned there?

Jean Hu, CFO, AMD: Yeah. Sovereign, we do think it’s a very large market opportunity. And for us, it’s actually incremental when you think about the hyperscale customer engagements that we have and the AI model company engagements that we have. We announced our collaboration with HUMAIN. Mhmm.

That is a major announcement, with a multi-billion-dollar opportunity. We also have more than 40 active engagements with different nations to really address this market opportunity. I think it will be more next year, because you do have the regulatory environment for sovereign AI. We are working with the US government closely to ensure we’re in compliance, so we get a license. Yep.

That takes some process to to get to there. But longer term, we do think it’s very large opportunity.

Chris Dainley, Semiconductor Analyst, Citigroup: And you said 40 other engagements? Four zero?

Jean Hu, CFO, AMD: Forty. More than 40 active engagements globally.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: That’s pretty good. Yeah. Chris, I think just a little color there. One of my other roles at the company is that I sit on the staff of our CTO, Mark Papermaster, and a lot of the work there is being done by our strategy team. We actually have some folks, very senior technical people at the company, who spend a lot of time at the national labs, as well as some folks focused on sovereign, really exploring the ways that high-performance computing and supercomputing have been funded and deployed in different countries around the world, and how those same mechanics might actually help deploy and give some insight into how sovereign AI rollouts are gonna happen.

So some of them, you mentioned HUMAIN, I think Jean did, in Saudi. There are some other things in the Middle East where countries have access to capital and have access to electric power

Chris Dainley, Semiconductor Analyst, Citigroup: Yep.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: In ways that they may be able to move quickly. But there’s a big diversity of different countries, what their infrastructure may look like, and how quickly they could potentially deploy, but the interest in having sovereign and independent compute infrastructure for nation states is almost ubiquitous.

Unidentified speaker: Yep.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: And so, just as we would with our hyperscale customers or enterprise customers, we’re working across those opportunities to hopefully earn representative share across all of those.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. Now in terms of your AI business, as we talked about before, huge ramp, obviously, last year, then there was some volatility in the first half of this year. Some of that was the China issue. Some of that was, I guess, something else. If we take out the whole MI308 thingy, why do you think the business has been so volatile? And can we expect this type of volatility going forward?

Do you think it will be a little bit smoother now that the business is gaining in size and maturity, I guess, a relative basis?

Jean Hu, CFO, AMD: Yeah. I think when you look at the first half of this year, the lumpiness is really because of the export control on MI308. You know, going back to last year, because there was tremendous demand on the China side, I think the whole industry was planning to meet that demand. And suddenly, with export controls, you really cannot ship that, and we actually wrote off $800 million of inventory to address that issue. I think that is a very unique, government- and policy-driven lumpiness. In the longer term, I think the business itself is going to scale.

We have many customers. But from a landscape perspective, we can all see the CapEx spending of the larger players is much larger. Right? So the AI landscape today, the capital spending today, is such that you have very large customers, and they could be lumpy. But for us, we do feel good about the progress now and the ramp of MI350, because we have many customers, not only hyperscale cloud customers but a lot of other customers, so we can diversify.

Chris Dainley, Semiconductor Analyst, Citigroup: Okay. Great. And then just to, you know, put the MI308 issue to bed, how do you guys view the MI308 business? We, you know, essentially just strip it out of the model. But, you know, how do you guys see that?

Are you, you know, moderating your investments there? Do you anticipate some sort of continually modified chip that you’ll be able to ship to China? How do the executives at AMD view that type of business?

Jean Hu, CFO, AMD: Yeah. Our view has always been, you know, China is an important market. We do want US AI to be populated in other countries. So we want to address that market opportunity. Specifically on MI308, you know, we wrote off the inventory.

Now we have the license. The key question becomes whether Chinese customers will be allowed to buy from the US. So we’re dealing with that kind of issue. Overall, we’re definitely not starting new wafers for MI308, right; we just want to make sure we get through the inventory we have, if we can sell it to Chinese customers. In the longer term, I think the way to think about it is we want to make sure we address that market.

If we can get a license for our next generation, we definitely will think about putting some work into the investment side.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Okay. Yeah. I think, Chris, the the same the knobs to sort of turn a global product into a a China compliant product are are not hugely technical. So a lot of it is around the Chinese. I think inside of China, there’s a larger demand for AI processing silicon than there is ability to manufacture that silicon in China.

So there’s a market there. Politically, on both sides, however we’re able to address it, we’d love to be able to support our customers there and continue to have US technology deployed where the AI research is being done. Yep. In that market. I think there’s a lot of different nuance to this, but I think we’re committed to supporting the customers there.

It’s just that getting visibility in the short term as to what that looks like, given all the moving parts, has been the challenge.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. Very helpful. And then on the AI business, a couple more questions there. So the margins are, I guess, slightly dilutive. Can you talk about why they’re slightly dilutive?

And is the plan to bring them up to the corporate average, or what should we expect there?

Jean Hu, CFO, AMD: Yeah. Thank you for the question. The gross margin of our AI business, our data center GPU business right now is below corporate average. I I think the way to think about it is the market is huge and it’s expanding so quickly. For us, the priority actually is to get the market presence, to get the market share, provide the customer better TCO.

So that really caused the gross margins slightly dilutive. But if you think about financially, we’re actually maximizing gross margin dollars as you have this kind of hyper growth market that you really want to make sure you get all the dollars that you want. Yep. Over time, we’re quite confident we’re going to be able to expand the gross margin as we scale the business. And if you think about structurally, data center business tends to have a higher than corporate average gross margin.

Unidentified speaker: Yep.

Jean Hu, CFO, AMD: But it will take some time. I think it’s really the trade-off of whether you want to maximize your gross margin dollars or your gross margin percentage. I think everyone, you know, will say, let’s focus on gross margin dollars.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah.

Chris Dainley, Semiconductor Analyst, Citigroup: Clearly, it hasn’t hurt the stock one iota. I wanna make sure that we heard it was slightly dilutive, not dilutive or very dilutive, to quote one of my former colleagues. And then in terms of the customer concentration, how do you expect that to trend over the next few years? Would you expect there to be maybe a, I don’t know, a small handful, four or five or something like that, driving most of the business, or do you see this really spreading out in a much longer tail? How do you think that’s gonna trend?

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. Chris, I think in the medium term, the I mean, the business will be relatively customer concentrated, right, just because of the the dollar amounts that we’re talking about people spending in CapEx. I mean, I I I imagine most of this audience has quite a few AI CapEx graphs in your inbox, and and you know that there there are some pretty big bars that make up the majority of that stack bar chart. So we I I think we’ve we’ve publicly said that we’ve or or think we have seven of the top 10 spenders as customers now. We’re engaged with a a couple others.

So we’re we think that that business will be. Now long term, right, you there’s a nuance here, Chris, around who are the invoiced customers Yep. And who are the people that consume the computing cycles. And those can be two different things just as it’s been in in the CPU cloud business where where Amazon and Google and others have rented CPU cycles to the industry through their cloud businesses. So I think through some of the the the hyperscale clouds, some of the neo clouds, there will certainly be a a a broadening out of the customer base as broader enterprise adopts AI.

And and that we see happening significantly over the next five to ten years, but the invoiced customers may still be fairly concentrated just given the dollar amounts that we’re talking about and the preplanning that needs to go into electrical infrastructure and water infrastructure. And and, I mean, you you don’t just turn up and start trying to build a 500 megawatt facility. Right? Then you need some pretty significant capital and and planning to be able to do that. So I think the the consumption customers will broaden out and diversify significantly.

The the invoice customers may still be relatively concentrated.

Chris Dainley, Semiconductor Analyst, Citigroup: Yeah. I think your two notable, I guess, competitors or other semi companies that service AI would say the exact same thing. Couple questions I get from from investors just on the sort of pricing going forward, especially into into next year. We know the die sizes are going up. Mean, can you guys leverage pricing and get better margins?

I think, Jean, on one of the conference calls, you said that the die size is going up, but the pricing should go up about as much as the BOM. I just wanted to clarify any comments you’ve made in the past on, you know, what we should expect from, like, margins or pricing going forward, if there are any changes?

Jean Hu, CFO, AMD: Yeah. If you look at each generation of our product, not only do we have more content and more capabilities, we also have more memory. Yep. So from that perspective, the BOM is increasing. Of course, the ASP is increasing each generation.

That’s absolutely the case. And what we want to do is make sure our customers get a better TCO. Yeah. A different size of customer, of course, is very different how we price it. But in general, the way to think about it is to give a customer best TCO and also make sure we get our gross margin and the gross margin dollars.

Got it. Right? Because we’re investing aggressively to address this market. So we definitely need the gross margin to be at the level to support our investment going forward.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. So it’s not, like, necessarily it’s gonna be gross margin dilutive or accretive?

Jean Hu, CFO, AMD: Yeah. Yeah. Yeah. It’s it’s priced based on how we think about the opportunities to return on investment. Yeah.

Chris Dainley, Semiconductor Analyst, Citigroup: How much of the BOM of these systems, I guess, is memory? I mean, is it, like, 20%, 30%, 50%?

Jean Hu, CFO, AMD: It’s very different for I think we never talk about in details what’s exactly the percentage, but you literally can calculate it based on how much memory we have. We actually different version, they probably have a different memory. So that’s different. Right?

Chris Dainley, Semiconductor Analyst, Citigroup: Sure. And then one of the most common questions I get is how do you see the market evolving between, you know, GPUs versus ASICs? And how do you see your share going forward? It sounds like the MI400 has got some pretty impressive performance statistics. Maybe we can expect to hear some at the analyst day.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. I think, Chris, we’ve been fairly consistent with the messaging on this topic. And as we talked about earlier in the discussion, the TAM has continued to expand. Right? We used to talk about, I don’t know, $100 billion in cloud CapEx in total, and now there may be multiple individual companies spending that much. Right?

So the TAM has expanded, but I think our view of this market has still been that that programmable systems where you you you put programmable infrastructure in place that can generate TCO over the full depreciable life of the hardware based on the software innovations of the industry during that entire period of time. I think that phenomenon has served the industry well in the CPU market, will serve the industry well in the GPU and accelerated computing market. But there are customers of ours that may have individual workloads that pieces of them become a little bit more fixed, where it totally makes sense to build an ASIC. And and they probably should do and will do. And that’s the majority of the ASIC market that we see today outside of what’s happening at Google with TPU, which is a a franchise and a phenomenal one in and of itself.

So I think our view has been that 25% of this TAM will probably be served by ASIC infrastructure, and that programmable, GPU-led infrastructure will, in our view, serve the remainder. And as I said, our job is to innovate such that we bring sustained competition to that biggest part of the TAM, and do it in a way that allows better TCO for our customers. So if we can do that, then there’s a lot of opportunity. As Jean said, it’s a large opportunity relative to the gross margin dollars that could come into our P&L. We feel really good about where we are, but we’ve got a lot to execute on as well.

Chris Dainley, Semiconductor Analyst, Citigroup: Great. I’d be remiss if I didn’t offer up any questions to the audience. Great.

Unidentified speaker: One question. First, on high-level strategy. You mentioned TCO a lot; you want to offer the best TCO for customers. But TCO is a kind of combination of performance and cost. Right?

I want to know what is the best description of your strategy. The first is that you offer the best performance, but with a better price. The second is that you offer decent or moderate performance, but with a much better price. So which could best describe your strategy?

Chris Dainley, Semiconductor Analyst, Citigroup: The question was on AMD’s TCO and what Sorry. I just have to repeat that. Repeat till here.

Jean Hu, CFO, AMD: Yeah. I’ll start. Matt, you can add. Thank you for the question. I I think the way to think about the TCO is the first and the foremost is performance.

Right? If you don’t have the performance, the customer will not even consider your product. On the performance side, if you think about AMD, we have always had a competitive advantage on the inferencing side, because we actually have more memory bandwidth and capacity for inferencing, and that definitely gives you much better performance. So that is the baseline for a customer even talking to you. And on that front, the key question then becomes the ASP side: you do want to provide some ASP benefit so they can improve their total TCO.

The reason is some customers have to switch. They have a switching cost they have to incur. Some have to work on the software side. So you do need to give a customer some kind of double-digit TCO benefit to make sure. But I would say performance is the most important, and ASP tends to be the tool in our toolbox that we use to make sure we can maximize the gross margin dollars and get more market share.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Yeah. Jean, the only thing I would add is I totally agree with what you’re saying. If you’re selling an individual unit of something, then discounting it significantly can change the economics. When you’re talking about generating TCO profit from billions of dollars of investment at data center scale, I don’t know how that math works unless you have the performance.

And so I think maybe I’ll just leave it at that.

Chris Dainley, Semiconductor Analyst, Citigroup: Thanks. Anything else from the audience? In the back over there, I think.

Unidentified speaker: Hey, guys. I was wondering if I could get some clarity around the comments on the tier two and three kind of workloads that you’re seeing right now from some of your customers. Can you just maybe go into more detail as to what the longer-term strategy is? I understand that they’re kind of onboarding it now to get ready for MI400, but is it more of a when, not if, on getting those training workloads? Just kind of curious about the longer-term layout. Thanks.

Matt Ramsay, VP of Financial Strategy and Investor Relations, AMD: Thank you for the question. I don’t know that we have a ton more detail to give today than we’ve given. I think there needs to be an onboarding for customers to be able to deploy training on AMD infrastructure at scale. And those onboardings are not just doing simulations or tests, but actually running production workloads, just at a scale that’s maybe a bit smaller right now, to prime the pump, as it were, for deployments in the future.

So, yeah, I don’t know if we have any additional detail or customer specifics that we can add. I mean, I guess you guys all saw the customers that came to our event. They came out on stage with us, and there were some other announcements we made. And I think the engagement on training is pretty broad across the customer set, but it’s in different phases with different folks right now. Unless, Jean, you have other things to add, I don’t know that we have too much more detail we can double-click on there.

Jean Hu, CFO, AMD: I think you covered it. I think our belief is there are going to be all kinds of sizes of models. In the long term, AMD is going to support all of them.

Chris Dainley, Semiconductor Analyst, Citigroup: I think there was one more question in the back.

Unidentified speaker: I guess just to end with something of a general question. And I apologize, I missed the very beginning, so if it was addressed, I apologize; just skip it. Can you just talk about, or do you have any views on, the current debate around overbuilding across the industry, overordering, et cetera?

There have been a couple of people talking about a bubble forming. And, obviously, I’m trying to phrase it as generally as possible. I was just wondering if you had any thoughts on the industry and data center expansion more generally.

Jean Hu, CFO, AMD: Yeah. I think Matt touched a little bit on the CapEx spending. I think when you look at the Q2 financial earnings reports from the hyperscale companies, not only are they increasing CapEx, but they also show tremendous evidence of AI adoption, which has improved the return on their investment, not only across their platforms but also through productivity improvement. I think in our own company, we also see AI adoption has helped the company dramatically in terms of performance, productivity, and headcount management, all those kinds of things. We hear of a lot of other companies adopting AI.

I think we’re still at a very early stage of AI adoption. In terms of the magnitude of how it can change how we work and how we live our lives, it’s very early. So that is our belief at this stage, when we look at the CapEx spending and at the continued capacity constraint for compute. And it’s not only on the GPU side: we actually start to see that AI adoption drives demand for general compute, where we have our CPU business. So it is very early on. I think, in the longer term, you know, there are ups and downs over each cycle.

But in the long term, when you look at this AI revolution, it’s probably a once-in-a-lifetime opportunity we’re seeing. And I think AMD actually is very well positioned to ensure we can address this large opportunity in the long term.

Chris Dainley, Semiconductor Analyst, Citigroup: Perfect timing, Jean. We’re out of time. Thanks, everyone. Thank you. Thank you.

This article was generated with the support of AI and reviewed by an editor. For more information see our T&C.